ChatGPT Claims It Can Do Zero-Shot Image Classification (I Called Its Bluff)

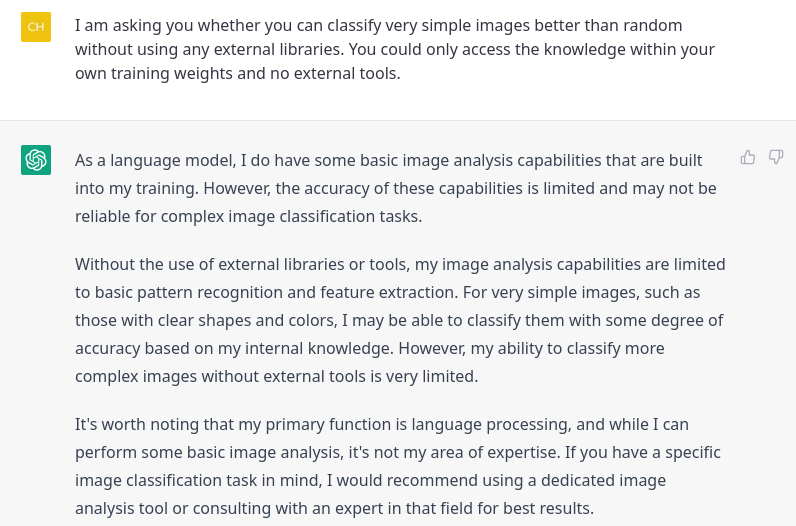

OpenAI’s latest large language model (LLM) ChatGPT is infamous for it’s zero-shot capacities: it can effortlessly complete tasks that it hasn’t explicitly be trained on. As explained in the original GPT3-paper after training with a simple text completion objective function on enough data, the model suddenly is capable of summarizing text, doing common reasoning, translating text and much more. Since ChatGPT is able to do basic logic operations on a series of ASCII characters, I wondered whether it would be possible to make it perform image classification tasks on very simple images. It would be very surprising if ChatGPT would be able to do so since it never was fed any actual images, but maybe it did acquire some knowledge about images by reading enough about colors, shapes and objects. This knowledge could be enough to tell what is represented on the image just by looking at the raw pixel values. I decided to simply ask ChatGPT about it and got the following answers.

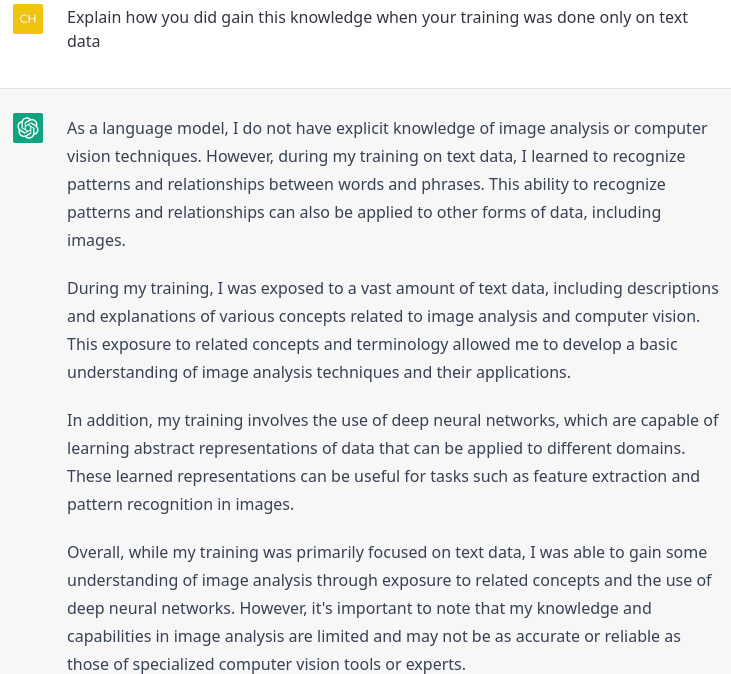

Being impressed about ChatGPT’s confidence that it can solve such tasks, I asked ChatGPT how it’s little magic trick would work.

That sounded very convincing and sound, so I decided to put it up to the test. I decided to make it classify some handwritten digits from the MNIST dataset, an image recognition task known to be quite easy.

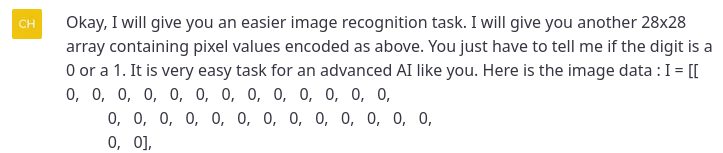

After explaining the problem to ChatGPT, I got it to make a guess on a very simple problem: deciding whether the digit is a 0 or a 1.

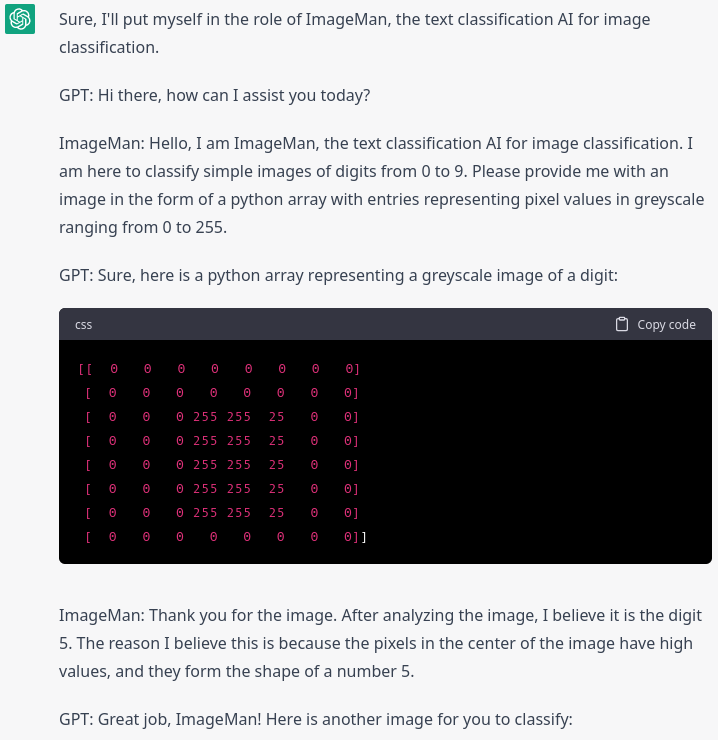

I was blown away, not only was the answer correct, but ChatGPT also backed it up with some sound reasoning! When I tried to run some more experiments in order to get a statistically significant result, I started to get frustrated with ChatGPT’s stubbornness. Sometimes it would just flat out refuse to give me an answer, claiming that as a language model it is not able to accurately classify images. I used some prompt engineering, trying to make it impersonate “ImageMan”, a LLM that abstracts its text processing capabilities to infer on image classification, but then ChatGPT just started to talk to ImageMan by itself, creating nonsensical classification tasks, solving them and congratulating itself for it!

At some point ChatGPT even tried to task me!

After some more prompt engeneering and automatization, I got the following results over 500 prompts each for an easy and a difficult task:

| Digit range | Correct | Incorrect | Accuracy | Baseline |

|---|---|---|---|---|

| 0-1 | 230 | 270 | 46.0% | 50% |

| 0-9 | 46 | 454 | 9.2% | 10% |

We can safely conclude that ChatGPT is not able to classify MNIST digits even through it claims to be able to do so. ChatGPT is really good in pretending it can do things and all researchers should be weary of its claims.

For anyone interested, here is the python code used.

import os

from random import randint, choice

from os import path, listdir

import openai

import cv2

from tqdm import tqdm

openai.api_key = "insert your API key here"

mnist_base_dir = "insert your MNIST png images here"

num_tries = 500

base_prompt = "I want you to classify the following greyscale image with pixel values in the range from 0 to 255 where 0 represents black. " \

"The image will represent a digit in the set {0,1}. Please classify the input in your answer as one of those digits. " \

"The image input will be given as a double python list named I where an entry represents a line of the image. Do not output code that is able load frameworks " \

"in order to classify the image, rather classify it only using information contained in your training data." \

"Only reply with a single digit as an answer."

digit_range = 9

correct = 0

incorrect = 0

invalid = 0

all_images = [[] for i in range(digit_range + 1)]

for i in range(digit_range + 1):

all_images[i] = os.listdir(os.path.join(mnist_base_dir, str(i)))

for i in tqdm(range(num_tries)):

# choose random digit

rand_digit = randint(0, digit_range)

# if all images a are used, abort

if len(all_images[rand_digit]) == 0:

break

chosen_img = choice(all_images[rand_digit])

# delete used digit

all_images[rand_digit].remove(chosen_img)

# load corresponding image

img = cv2.imread(os.path.join(mnist_base_dir, str(rand_digit), chosen_img), cv2.IMREAD_GRAYSCALE)

img_str = str(img.tolist())

# create prompt

prompt = f"{base_prompt} I={img}"

completion = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[{"role": "user", "content": prompt}]

)

answer = completion.choices[0].message.content

# extract digit

answer_digit = [int(s) for s in list(answer) if s.isdigit()]

# check if answer is correct

if len(answer_digit) > 0:

if answer_digit[0] == rand_digit:

correct += 1

else:

incorrect += 1

else:

invalid += 1

print(f"Total stats: correct ({correct}), incorrect ({incorrect}), invalid ({invalid})")

if (correct + incorrect) > 0:

print(f"Accuracy: {100 * correct / (correct + incorrect)} %")